ARA-C01 Exam Dumps - SnowPro Advanced: Architect Certification Exam

Searching for workable clues to ace the Snowflake ARA-C01 exam? You’re in the right place! ExamCert has realistic, trusted, and authentic exam prep tools to help you earn your desired credential. ExamCert’s ARA-C01 PDF Study Guide, Testing Engine, and Exam Dumps follow a reliable exam preparation strategy, giving you relevant, up-to-date study material in an easy-to-learn question-and-answer format. ExamCert’s study tools aim to simplify the exam's complex and confusing concepts, introduce you to the real exam scenario, and let you practice it with the testing engine and real exam dumps.

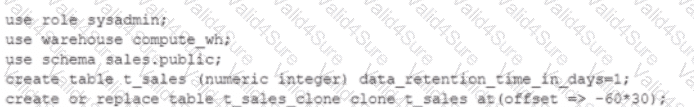

An Architect runs the following SQL query:

How can this query be interpreted?

A user executes the following commands sequentially, within a timeframe of 10 minutes from start to finish:

What would be the output of this query?

A company is using Snowflake on Azure in the Netherlands. The company’s analyst team also has JSON data stored in an Amazon S3 bucket in the AWS Singapore region, and the team wants to analyze that data.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

Which data models can be used when modeling tables in a Snowflake environment? (Select THREE).

An Architect is troubleshooting a poorly performing query using the QUERY_HISTORY function. The Architect observes that the COMPILATION_TIME is greater than the EXECUTION_TIME.

What is the reason for this?
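To make the observation concrete, here is a minimal sketch that compares the two values for a batch of queries. Snowflake's QUERY_HISTORY output does expose COMPILATION_TIME and EXECUTION_TIME columns in milliseconds. However, this sketch assumes those rows have already been exported into Python, and the `QueryRecord` type, its field names, and the sample query IDs are hypothetical. The code only flags compile-dominated queries and computes the share of time spent compiling. It does not diagnose why compilation is slow.

```python
from dataclasses import dataclass


@dataclass
class QueryRecord:
    # Hypothetical container for one exported QUERY_HISTORY row (times in ms).
    query_id: str
    compilation_time_ms: int
    execution_time_ms: int


def compile_dominated(records):
    """Return the IDs of queries whose compilation time exceeds execution time."""
    return [r.query_id for r in records if r.compilation_time_ms > r.execution_time_ms]


def compile_share(record):
    """Fraction of (compilation + execution) time spent compiling; 0.0 if both are zero."""
    total = record.compilation_time_ms + record.execution_time_ms
    return record.compilation_time_ms / total if total else 0.0


if __name__ == "__main__":
    # Illustrative sample rows, not real query history.
    rows = [
        QueryRecord("q1", compilation_time_ms=1200, execution_time_ms=300),
        QueryRecord("q2", compilation_time_ms=150, execution_time_ms=4000),
    ]
    print(compile_dominated(rows))
    print(round(compile_share(rows[0]), 2))
```

In a real account, the same comparison would normally be done directly in SQL against the query history. The Python form just keeps the logic self-contained.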

Which Snowflake architecture recommendation needs multiple Snowflake accounts for implementation?

What is the MOST efficient way to design an environment where data retention is not considered critical, and customization needs are to be kept to a minimum?

A company’s daily Snowflake workload consists of a very large number of concurrent queries triggered between 9 p.m. and 11 p.m. Individually, these queries are small statements that complete quickly.

What configuration can the company’s Architect implement to enhance the performance of this workload? (Choose two.)
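One way to reason about this scenario is a simplified back-of-envelope model of queuing. This sketch treats each warehouse cluster as if it could run at most `max_concurrency_level` queries at once. It uses 8 as the default because that is Snowflake's default MAX_CONCURRENCY_LEVEL. This is an assumption. Snowflake actually applies that parameter as a resource-based limit rather than a fixed slot count, so small queries may run at higher concurrency. The function names and the `max_clusters` cap of 10 are hypothetical and only illustrate the arithmetic. This is not Snowflake's actual scaling policy.

```python
import math


def clusters_needed(concurrent_queries, max_concurrency_level=8,
                    min_clusters=1, max_clusters=10):
    """Rough count of clusters needed to run all queries without queuing,
    clamped to a hypothetical [min_clusters, max_clusters] range."""
    if concurrent_queries <= 0:
        return min_clusters
    needed = math.ceil(concurrent_queries / max_concurrency_level)
    return max(min_clusters, min(needed, max_clusters))


def queued_queries(concurrent_queries, max_concurrency_level=8, clusters=1):
    """Queries left waiting when capacity is modeled as slots per cluster times clusters."""
    capacity = max_concurrency_level * clusters
    return max(0, concurrent_queries - capacity)


if __name__ == "__main__":
    # 20 concurrent small queries with the default level of 8 per cluster.
    print(clusters_needed(20))
    print(queued_queries(20, clusters=2))
```

Under this model, queuing can be reduced in two ways: by adding clusters or by raising the per-cluster concurrency level. Real behavior depends on query size and the warehouse's scaling settings.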