DP-300 Exam Dumps - Administering Relational Databases on Microsoft Azure

Searching for workable clues to ace the Microsoft DP-300 exam? You're in the right place! ExamCert has realistic, trusted, and authentic exam prep tools to help you earn your desired credential. ExamCert's DP-300 PDF Study Guide, Testing Engine, and Exam Dumps follow a reliable exam preparation strategy, providing you with relevant, up-to-date study material in an easy-to-learn question-and-answer format. ExamCert's study tools aim to simplify the complex and confusing concepts of the exam, introduce you to the real exam scenario, and let you practice with its testing engine and real exam dumps.

You have an Azure virtual machine named Server1 that runs Windows Server 2022. Server1 contains an instance of Microsoft SQL Server 2022 named SQL1 and a database named DB1.

You create a master key in the master database of SQL1.

You need to create an encrypted backup of DB1.

What should you do?

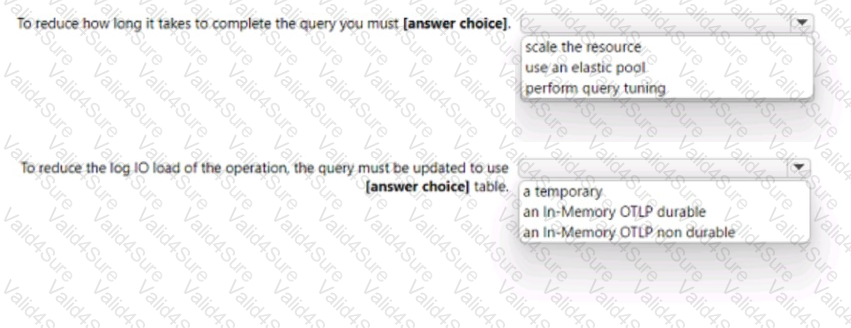

You have an Azure SQL database that contains a table named Table1.

You run a query to load data into Table1.

The performance of Table1 during the load operation is shown in the exhibit.

You have an Azure Synapse Analytics workspace named WS1 that contains an Apache Spark pool named Pool1.

You plan to create a database named DB1 in Pool1.

You need to ensure that when tables are created in DB1, the tables are available automatically as external tables to the built-in serverless SQL pool.

Which format should you use for the tables in DB1?

You need to use an Azure Resource Manager (ARM) template to deploy an Azure virtual machine that will host a Microsoft SQL Server instance. The solution must maximize disk I/O performance for the SQL Server database and log files.

How should you complete the template? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

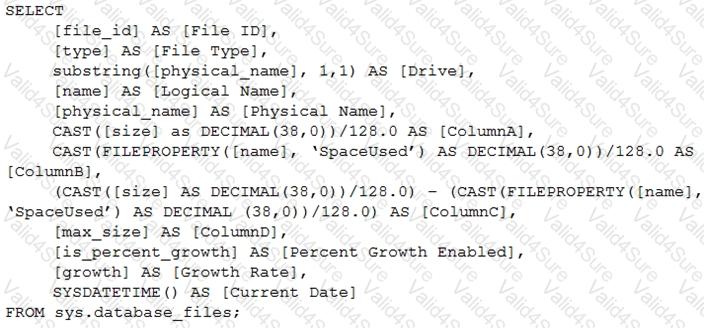

You have the following Transact-SQL query.

Which column returned by the query represents the free space in each file?

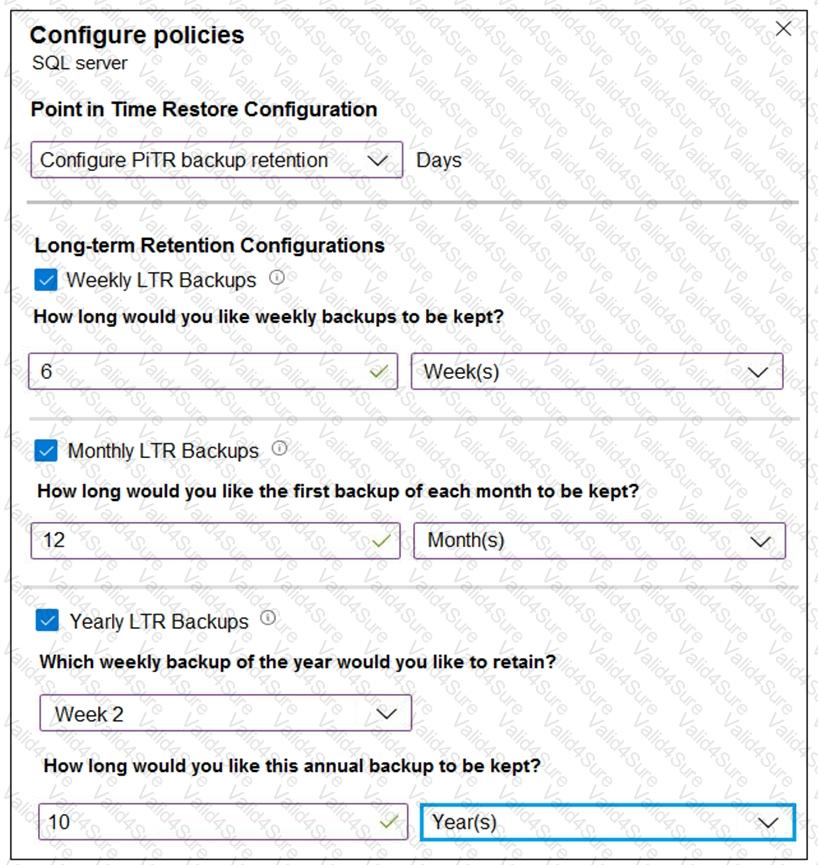

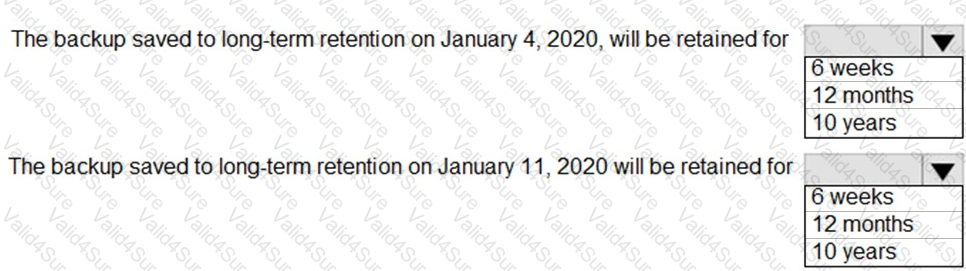

You configure a long-term retention policy for an Azure SQL database as shown in the exhibit. (Click the Exhibit tab.)

The first weekly backup occurred on January 4, 2020. The dates for the first 10 weekly backups are:

January 4, 2020

January 11, 2020

January 18, 2020

January 25, 2020

February 1, 2020

February 8, 2020

February 15, 2020

February 22, 2020

February 29, 2020

March 7, 2020
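As a quick arithmetic check on the schedule above, the following minimal Python sketch regenerates the ten weekly dates from the stated first backup on January 4, 2020, assuming a fixed seven-day interval between backups. It says nothing about the retention policy itself (that is in the exhibit); it only confirms the listed dates, including the leap day February 29, 2020. The function name `weekly_backup_dates` is hypothetical and used only for illustration.

```python
from datetime import date, timedelta


def weekly_backup_dates(first: date, count: int) -> list[date]:
    """Return `count` dates spaced exactly seven days apart, starting at `first`.

    Hypothetical helper: assumes weekly backups land on the same weekday
    every week, as the list in the question implies.
    """
    return [first + timedelta(weeks=i) for i in range(count)]


if __name__ == "__main__":
    # Print each date in the same "Month D, YYYY" style as the question.
    for d in weekly_backup_dates(date(2020, 1, 4), 10):
        print(f"{d:%B} {d.day}, {d.year}")
```

Because 2020 is a leap year, stepping seven days from February 22 lands on February 29 rather than March 1, which matches the ninth date in the list.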

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

You have a new Azure SQL database. The database contains a column that stores confidential information.

You need to track each time values from the column are returned in a query. The tracking information must be stored for 365 days from the date the query was executed.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

You have an Azure Synapse Analytics Apache Spark pool named Pool1.

You plan to load JSON files from an Azure Data Lake Storage Gen2 container into the tables in Pool1. The structure and data types vary by file.

You need to load the files into the tables. The solution must maintain the source data types.

What should you do?